- Does startActivity cross a process boundary when it executes?

- If it does, which process does it communicate with?

- What about services, broadcasts, and the like?

The concept of Binder

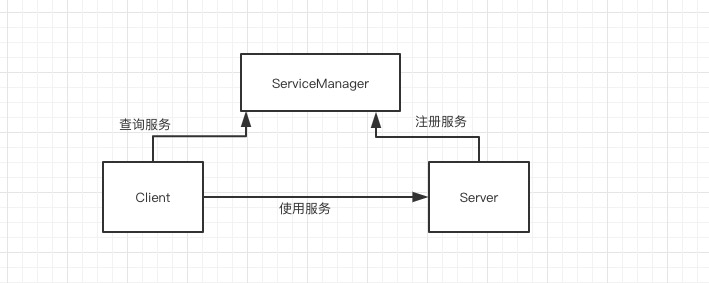

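- Binder is an IPC mechanism provided by Android. Binder communication resembles a client/server (C/S) architecture, but in addition to clients and servers there is a ServiceManager that manages the whole system.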

Binder: shuttling between processes

What does servicemanager do?

How does servicemanager tell the Binder driver that it is the context manager of the Binder mechanism?

Using MediaServer as an example

MediaServer hosts the following services:

- AudioFlinger

- AudioPolicyService

- MediaPlayerService

- CameraService


- The ProcessState constructor


- What does ProcessState do? To summarize:

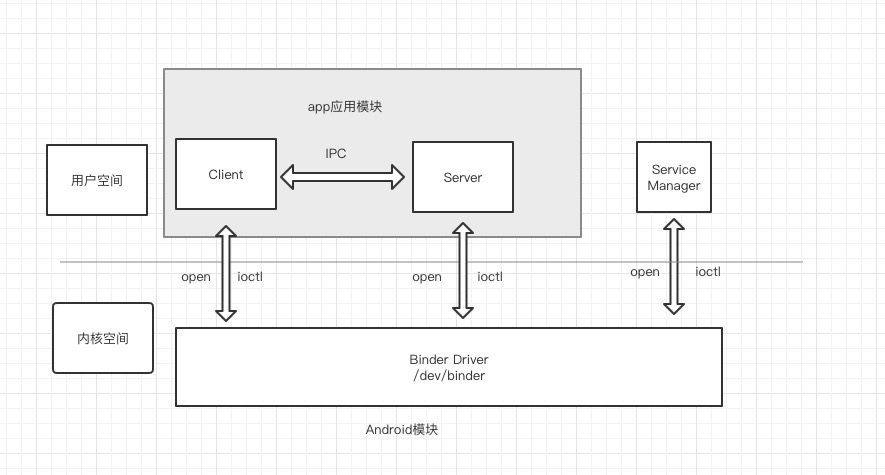

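- It opens the /dev/binder device, which gives the process a channel (ioctl) for talking to the Binder driver in the kernel.

- It calls mmap to set up a block of memory in which the Binder driver places incoming data.

- ProcessState is a per-process singleton, so each process opens the device only once.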
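The "one instance per process, device opened once" behavior can be sketched in C++. This is a simplified stand-in written for illustration, not Android's ProcessState code. The names `toy::ProcessState`, `deviceOpenCount` and `driverFd` are hypothetical, and `open()`/`mmap()` are only simulated by a counter and a fake descriptor:

```cpp
#include <cassert>
#include <mutex>

namespace toy {

// Hypothetical, simplified stand-in for ProcessState: at most one instance per process.
class ProcessState {
public:
    static ProcessState* self() {
        std::lock_guard<std::mutex> lock(sMutex);
        if (sInstance == nullptr) {
            sInstance = new ProcessState();  // created on first use, intentionally never freed
        }
        return sInstance;
    }
    static int deviceOpenCount() { return sOpenCount; }
    int driverFd() const { return mDriverFd; }

private:
    ProcessState() : mDriverFd(openDriver()) {}

    static int openDriver() {
        // The real code opens /dev/binder and then mmap()s a receive buffer;
        // this sketch only records that the "device" was opened.
        ++sOpenCount;
        return 3;  // pretend file descriptor
    }

    int mDriverFd;
    static std::mutex sMutex;
    static ProcessState* sInstance;
    static int sOpenCount;
};

std::mutex ProcessState::sMutex;
ProcessState* ProcessState::sInstance = nullptr;
int ProcessState::sOpenCount = 0;

}  // namespace toy
```

The mutex matters because several threads may call `self()` at the same time. Without it, two of them could each create an instance and open the device twice.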


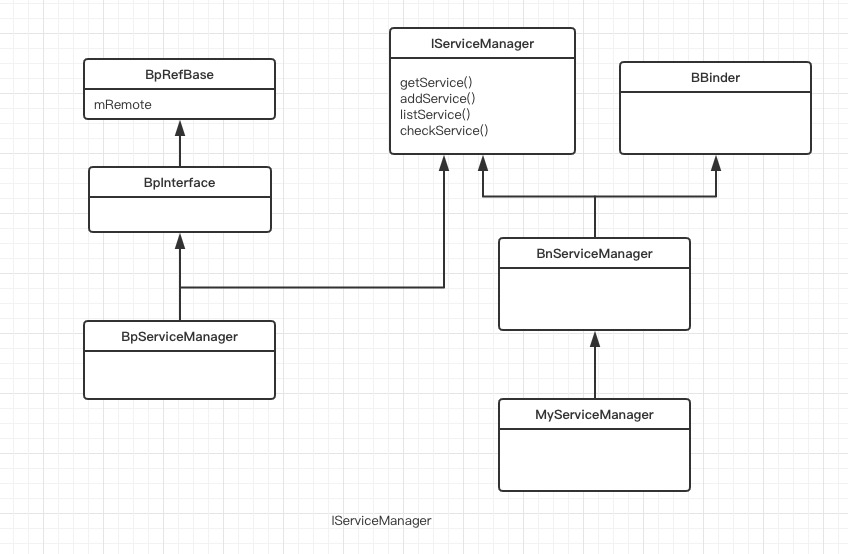

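This introduces the concept of BpBinder. BpBinder and BBinder both derive from IBinder, and they correspond one to one: BpBinder is the proxy on the client side, and BBinder is the object on the server side. The BpBinder created above has handle 0, so its counterpart is the object behind handle 0, which in Android is ServiceManager.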
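The proxy/local pairing can be modeled in a few lines of C++. Everything here is a hypothetical toy: `driverTable()` stands in for the kernel driver's handle bookkeeping, and payloads are plain strings instead of Parcels. It shows a BpBinder that holds only a handle, and a transaction on handle 0 that reaches the object registered as ServiceManager:

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

namespace toy {

// Hypothetical, simplified stand-ins; these are not Android's real signatures.
class IBinder {
public:
    virtual ~IBinder() = default;
    virtual int transact(uint32_t code, const std::string& data, std::string* reply) = 0;
};

// Local object living in the server process.
class BBinder : public IBinder {
public:
    int transact(uint32_t code, const std::string& data, std::string* reply) override {
        return onTransact(code, data, reply);
    }
protected:
    virtual int onTransact(uint32_t code, const std::string& data, std::string* reply) = 0;
};

// Toy "driver": maps a handle to the BBinder it refers to. Handle 0 is the context manager.
std::map<int32_t, BBinder*>& driverTable() {
    static std::map<int32_t, BBinder*> table;
    return table;
}

// Proxy living in the client process: it knows only a handle.
class BpBinder : public IBinder {
public:
    explicit BpBinder(int32_t handle) : mHandle(handle) {}
    int transact(uint32_t code, const std::string& data, std::string* reply) override {
        auto it = driverTable().find(mHandle);
        if (it == driverTable().end()) return -1;       // unknown or dead handle
        return it->second->transact(code, data, reply);  // "crosses" to the server side
    }
private:
    int32_t mHandle;
};

// Toy ServiceManager that just echoes what it received.
class FakeServiceManager : public BBinder {
protected:
    int onTransact(uint32_t code, const std::string& data, std::string* reply) override {
        *reply = "servicemanager got code " + std::to_string(code) + ": " + data;
        return 0;
    }
};

}  // namespace toy
```

For example, after `driverTable()[0] = &sm`, calling `BpBinder(0).transact(3, "hello", &reply)` lands in `FakeServiceManager::onTransact`.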

Note the call to interface_cast here.


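interface_cast is a template function that simply forwards to INTERFACE::asInterface(), so interface_cast<IServiceManager>(obj) is equivalent to IServiceManager::asInterface(obj).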
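The forwarding can be sketched with `std::shared_ptr` in place of Android's `sp<>`. This is a hypothetical toy: `remoteHandle()` exists only so the result can be inspected, and this `asInterface` always builds a proxy. It mirrors only the shape of the real template:

```cpp
#include <cassert>
#include <memory>
#include <utility>

namespace toy {

// Hypothetical, simplified types for illustration only.
struct IBinder { virtual ~IBinder() = default; };
struct BpBinder : IBinder {
    explicit BpBinder(int h) : handle(h) {}
    int handle;
};

// Same shape as interface_cast: no logic of its own, it just calls INTERFACE::asInterface.
template <typename INTERFACE>
std::shared_ptr<INTERFACE> interface_cast(const std::shared_ptr<IBinder>& obj) {
    return INTERFACE::asInterface(obj);
}

struct IServiceManager {
    virtual ~IServiceManager() = default;
    virtual int remoteHandle() const = 0;  // hypothetical accessor for the sketch
    static std::shared_ptr<IServiceManager> asInterface(const std::shared_ptr<IBinder>& obj);
};

// Proxy that wraps the binder it was given.
struct BpServiceManager : IServiceManager {
    explicit BpServiceManager(std::shared_ptr<IBinder> remote) : mRemote(std::move(remote)) {}
    int remoteHandle() const override {
        auto* bp = dynamic_cast<BpBinder*>(mRemote.get());
        return bp ? bp->handle : -1;
    }
    std::shared_ptr<IBinder> mRemote;
};

std::shared_ptr<IServiceManager> IServiceManager::asInterface(const std::shared_ptr<IBinder>& obj) {
    if (!obj) return nullptr;
    return std::make_shared<BpServiceManager>(obj);
}

}  // namespace toy
```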


So let's go back to IServiceManager.h.


This macro, DECLARE_META_INTERFACE, is defined in IInterface.h.


Substituting ServiceManager into the macro definition gives the following:


IServiceManager uses the IMPLEMENT_META_INTERFACE macro as follows:


Expanding the macro gives:


The expanded macro shows that asInterface returns a BpServiceManager object. In short: new BpBinder(0) is passed as the argument, and IServiceManager::asInterface() turns it into a BpServiceManager object.
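The macro pair can be imitated with two simplified macros. `TOY_DECLARE_META_INTERFACE` and `TOY_IMPLEMENT_META_INTERFACE` are hypothetical names; the real macros in IInterface.h also declare members such as getInterfaceDescriptor(). The sketch keeps only the essential part: a descriptor string, plus an `asInterface()` that returns a local object when `queryLocalInterface()` finds one (same process) and otherwise wraps the binder in a `Bp##INTERFACE` proxy:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

namespace toy {

// Hypothetical, simplified base types.
struct IInterface { virtual ~IInterface() = default; };
struct IBinder {
    virtual ~IBinder() = default;
    // A remote proxy has no local interface, so it returns nullptr.
    virtual std::shared_ptr<IInterface> queryLocalInterface(const std::string&) { return nullptr; }
};
struct BpBinder : IBinder { explicit BpBinder(int h) : handle(h) {} int handle; };

// Simplified imitation of DECLARE_META_INTERFACE.
#define TOY_DECLARE_META_INTERFACE(INTERFACE)                              \
    static const std::string descriptor;                                   \
    static std::shared_ptr<I##INTERFACE> asInterface(                      \
        const std::shared_ptr<IBinder>& obj);

// Simplified imitation of IMPLEMENT_META_INTERFACE.
#define TOY_IMPLEMENT_META_INTERFACE(INTERFACE, NAME)                      \
    const std::string I##INTERFACE::descriptor(NAME);                      \
    std::shared_ptr<I##INTERFACE> I##INTERFACE::asInterface(               \
        const std::shared_ptr<IBinder>& obj) {                             \
        if (!obj) return nullptr;                                          \
        /* same process: use the local object directly */                  \
        auto local = std::dynamic_pointer_cast<I##INTERFACE>(              \
            obj->queryLocalInterface(descriptor));                         \
        if (local) return local;                                           \
        /* other process: wrap the binder in a proxy */                    \
        return std::make_shared<Bp##INTERFACE>(obj);                       \
    }

struct IServiceManager : IInterface {
    TOY_DECLARE_META_INTERFACE(ServiceManager)
};

struct BpServiceManager : IServiceManager {
    explicit BpServiceManager(std::shared_ptr<IBinder> remote) : mRemote(std::move(remote)) {}
    std::shared_ptr<IBinder> mRemote;
};

TOY_IMPLEMENT_META_INTERFACE(ServiceManager, "android.os.IServiceManager")

}  // namespace toy
```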

So what exactly is BpServiceManager?

Let's look at the BpServiceManager source, which is also in IServiceManager.cpp.


Following the constructor chain (BpServiceManager → BpInterface → BpRefBase) shows that remote() returns the BpBinder.


So BpServiceManager implements the business functions of IServiceManager, while BpBinder serves as the communication object.

Summary: defaultServiceManager() essentially creates a BpServiceManager object and sets up a channel that uses BpBinder for communication.
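The split between business and communication can be made concrete with a toy `addService`. The sketch makes several simplifying assumptions. `Parcel` is a list of strings, `Transport` stands in for BpBinder, and `writeStrongBinder` takes a plain handle; the real one flattens a binder object. The transaction code 3 mirrors SVC_MGR_ADD_SERVICE in older service_manager.c and should be treated as an assumption:

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

namespace toy {

// Hypothetical Parcel stand-in: each written value becomes one string field.
struct Parcel {
    std::vector<std::string> fields;
    void writeInterfaceToken(const std::string& descriptor) { fields.push_back(descriptor); }
    void writeString(const std::string& s) { fields.push_back(s); }
    void writeStrongBinder(int32_t handle) { fields.push_back("binder:" + std::to_string(handle)); }
    void writeInt32(int32_t v) { fields.push_back(std::to_string(v)); }
};

// Communication side (the role BpBinder plays): it only carries data.
struct Transport {
    virtual ~Transport() = default;
    virtual int transact(uint32_t code, const Parcel& data, Parcel* reply) = 0;
};

// Business side (the role BpServiceManager plays): it decides what goes into the Parcel.
class BpServiceManager {
public:
    static constexpr uint32_t ADD_SERVICE_TRANSACTION = 3;  // assumed value, see lead-in

    explicit BpServiceManager(Transport* remote) : mRemote(remote) {}

    int addService(const std::string& name, int32_t binderHandle, bool allowIsolated) {
        Parcel data, reply;
        data.writeInterfaceToken("android.os.IServiceManager");
        data.writeString(name);
        data.writeStrongBinder(binderHandle);
        data.writeInt32(allowIsolated ? 1 : 0);
        return remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);
    }

private:
    Transport* remote() const { return mRemote; }
    Transport* mRemote;
};

// Test double for the communication side: records the request instead of sending it.
struct RecordingTransport : Transport {
    uint32_t lastCode = 0;
    Parcel lastData;
    int transact(uint32_t code, const Parcel& data, Parcel* reply) override {
        lastCode = code;
        lastData = data;
        reply->writeInt32(0);  // pretend the remote side answered "OK"
        return 0;
    }
};

}  // namespace toy
```

Because BpServiceManager only sees the `Transport` interface, the recording double can replace the real channel without touching the business code.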

Next, let's continue with MediaPlayerService.cpp.


So let's look at the business method BpServiceManager::addService().


Next, look at BpBinder::transact().


The IPCThreadState constructor


OK, now that we have the IPCThreadState object, the next step is its transact() method.


Moving on to writeTransactionData():
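What writeTransactionData() essentially does is queue a command word followed by a transaction descriptor in the outgoing buffer (mOut). Nothing reaches the driver until talkWithDriver() runs. The sketch below assumes a cut-down `TransactionData` that only loosely resembles the kernel's binder_transaction_data, and uses arbitrary command values rather than the real ioctl-style encodings:

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

namespace toy {

// Arbitrary values for the sketch; the real BC_* codes are ioctl-style encodings.
enum : int32_t { BC_TRANSACTION = 1, BC_REPLY = 2 };

// Hypothetical, cut-down cousin of binder_transaction_data.
struct TransactionData {
    int32_t handle;       // target handle: 0 means ServiceManager
    uint32_t code;        // e.g. the add-service transaction code
    uint32_t flags;
    uint64_t dataSize;    // size of the Parcel payload
    const void* dataPtr;  // points at the Parcel buffer; the payload itself is not copied here
};

// Growable byte buffer playing the role of IPCThreadState::mOut.
struct OutBuffer {
    std::vector<uint8_t> bytes;
    template <typename T>
    void write(const T& value) {
        const auto* p = reinterpret_cast<const uint8_t*>(&value);
        bytes.insert(bytes.end(), p, p + sizeof(T));
    }
};

// Queues [cmd][TransactionData] into mOut.
void writeTransactionData(OutBuffer& mOut, int32_t cmd, int32_t handle, uint32_t code,
                          const std::vector<uint8_t>& parcel) {
    TransactionData tr{};
    tr.handle = handle;
    tr.code = code;
    tr.flags = 0;
    tr.dataSize = parcel.size();
    tr.dataPtr = parcel.data();
    mOut.write(cmd);
    mOut.write(tr);
}

}  // namespace toy
```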


Next comes status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult):


Now look at talkWithDriver():


Then look at status_t IPCThreadState::executeCommand(int32_t cmd):


To summarize: MediaPlayerService::instantiate() roughly amounts to talking to the Binder driver through ioctl, and the driver uses the handle (0) to deliver the request to ServiceManager.
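The shape of the waitForResponse() loop can be sketched against a scripted fake driver. The client keeps calling into the driver and skips BR_TRANSACTION_COMPLETE (the driver has accepted the request) until a BR_REPLY or an error ends the transaction. The command values, status codes, and `FakeDriver` are hypothetical. The real loop also handles one-way calls and more BR_* commands:

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <string>

namespace toy {

// Illustrative values; the real BR_* codes are ioctl-style encodings.
enum : int32_t { BR_NOOP = 1, BR_TRANSACTION_COMPLETE = 2, BR_REPLY = 3, BR_DEAD_REPLY = 4 };
constexpr int kOk = 0, kNoMoreData = -1, kDeadObject = -2;  // hypothetical status codes

struct DriverReturn { int32_t cmd; std::string payload; };

// Stand-in for talkWithDriver(): each call hands back the next queued return command.
struct FakeDriver {
    std::deque<DriverReturn> pending;
    bool talk(DriverReturn* out) {
        if (pending.empty()) return false;  // the real ioctl would block instead
        *out = pending.front();
        pending.pop_front();
        return true;
    }
};

// Same loop shape as waitForResponse(): keep reading until BR_REPLY or an error ends it.
int waitForResponse(FakeDriver& driver, std::string* reply) {
    DriverReturn r{};
    while (driver.talk(&r)) {
        switch (r.cmd) {
            case BR_TRANSACTION_COMPLETE:
                break;  // request accepted by the driver; the reply is still pending
            case BR_REPLY:
                *reply = r.payload;
                return kOk;
            case BR_DEAD_REPLY:
                return kDeadObject;
            default:
                break;  // e.g. BR_NOOP; the real code passes other commands to executeCommand()
        }
    }
    return kNoMoreData;
}

}  // namespace toy
```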

Next, the thread pool: startThreadPool


Now look at PoolThread:


And we are back at joinThreadPool:


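This child thread also uses talkWithDriver, that is, ioctl, to communicate with the Binder driver. So the MediaServer process that hosts MediaPlayerService reads the Binder device from two threads: its main thread, which calls joinThreadPool, and a new thread started through startThreadPool.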
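The two-reader arrangement can be modeled with standard threads. This is a hypothetical model, not libbinder code. `FakeBinderDevice` is a blocking queue standing in for the driver. `startThreadPool` spawns one extra looper and returns at once, and the main thread then calls `joinThreadPool` itself, so both threads pull work from the same "device":

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

namespace toy {

// Hypothetical blocking queue standing in for the Binder device.
class FakeBinderDevice {
public:
    void post(int work) {
        { std::lock_guard<std::mutex> lk(mMutex); mQueue.push(work); }
        mCv.notify_one();
    }
    void shutdown() {
        { std::lock_guard<std::mutex> lk(mMutex); mClosed = true; }
        mCv.notify_all();
    }
    // Blocks like talkWithDriver(); returns false once shut down and fully drained.
    bool read(int* work) {
        std::unique_lock<std::mutex> lk(mMutex);
        mCv.wait(lk, [this] { return mClosed || !mQueue.empty(); });
        if (mQueue.empty()) return false;
        *work = mQueue.front();
        mQueue.pop();
        return true;
    }
private:
    std::mutex mMutex;
    std::condition_variable mCv;
    std::queue<int> mQueue;
    bool mClosed = false;
};

// Like joinThreadPool(): loop reading work from the device and "executing" it.
void joinThreadPool(FakeBinderDevice& dev, std::mutex& m, std::vector<int>& handled) {
    int work;
    while (dev.read(&work)) {
        std::lock_guard<std::mutex> lk(m);
        handled.push_back(work);  // executeCommand() would dispatch here
    }
}

// Like startThreadPool(): spawn one extra looper thread (the PoolThread role) and return.
std::thread startThreadPool(FakeBinderDevice& dev, std::mutex& m, std::vector<int>& handled) {
    return std::thread(joinThreadPool, std::ref(dev), std::ref(m), std::ref(handled));
}

}  // namespace toy
```

A usage that mirrors MediaServer's main(): call `startThreadPool(...)`, then call `joinThreadPool(...)` on the main thread. In this toy, the main thread's call returns only after `shutdown()`.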

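Binder (with BpBinder as its communication object) is the communication mechanism, while BpServiceManager and similar classes carry the business logic. Keeping the two apart makes everything easier to follow. Binder is hard to understand precisely because its layers of wrapping cleverly blend communication and business together.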

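As mentioned above, defaultServiceManager() essentially creates a BpServiceManager object and sets up a channel that uses BpBinder for communication. The handle passed in is 0, which denotes ServiceManager. Now let's see how ServiceManager handles requests.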

The source of service_manager.c:


binder_become_context_manager(bs) is also worth a look:
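This answers the earlier question of how servicemanager becomes the context manager: binder_become_context_manager() issues the BINDER_SET_CONTEXT_MGR ioctl. The driver accepts that ioctl from only one process, and a second attempt fails with -EBUSY. The toy model below captures just that rule. `FakeBinderDriver` and its methods are hypothetical, and nothing here talks to a real driver:

```cpp
#include <cassert>
#include <cerrno>

namespace toy {

// Hypothetical model of the driver-side rule: only one process may become the
// context manager, and from then on handle 0 resolves to that process.
class FakeBinderDriver {
public:
    // Stands in for ioctl(fd, BINDER_SET_CONTEXT_MGR, 0).
    int setContextManager(int pid) {
        if (mContextManagerPid != kNone) return -EBUSY;  // slot already taken
        mContextManagerPid = pid;
        return 0;
    }
    // Whoever registered first is what handle 0 refers to.
    int resolveHandle0() const { return mContextManagerPid; }

private:
    static constexpr int kNone = -1;
    int mContextManagerPid = kNone;
};

}  // namespace toy
```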


Finally, look at binder_loop(bs, svcmgr_handler);


Continue with binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func):


In the end the request is handed to func, a function pointer; main() passes in svcmgr_handler.
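Handing the request to a function pointer keeps the parsing loop generic. The loop walks [command][payload] records and passes each transaction to whatever handler it was given. In the sketch below, the command values, the `Txn` struct, and the `toy_handler` signature are hypothetical simplifications. The real binder_handler receives the binder_state, the transaction data, and two binder_io buffers:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

namespace toy {

enum : uint32_t { BR_NOOP = 0, BR_TRANSACTION = 1 };  // illustrative values

struct Txn { uint32_t code; int32_t senderUid; };  // hypothetical cut-down transaction

// The parser does not know what a request means; it only forwards it.
typedef int (*toy_handler)(const Txn* txn, void* cookie);

// Walks a read buffer of [cmd][payload] records, in the spirit of binder_parse().
int binder_parse(const uint8_t* ptr, size_t size, toy_handler func, void* cookie) {
    const uint8_t* end = ptr + size;
    while (ptr + sizeof(uint32_t) <= end) {
        uint32_t cmd;
        std::memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);
        switch (cmd) {
            case BR_NOOP:
                break;
            case BR_TRANSACTION: {
                if (ptr + sizeof(Txn) > end) return -1;  // truncated record
                Txn txn;
                std::memcpy(&txn, ptr, sizeof(txn));
                ptr += sizeof(txn);
                if (func) func(&txn, cookie);  // business logic lives behind the pointer
                break;
            }
            default:
                return -1;  // unknown command
        }
    }
    return 0;
}

}  // namespace toy
```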


Now look at how the do_add_service(bs, s, len, handle, txn->sender_euid, allow_isolated, dumpsys_priority, txn->sender_pid) request is handled:
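At its core, ServiceManager's bookkeeping is a name-to-handle table. The sketch uses a `std::map` for brevity, whereas service_manager.c keeps a linked list of svcinfo entries. `ServiceRegistry` and `SvcInfo` are hypothetical names. Registering an existing name replaces its handle, handle 0 is rejected, and isolated callers cannot see services that did not allow isolated access:

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

namespace toy {

// What ServiceManager remembers per service (hypothetical, simplified).
struct SvcInfo {
    uint32_t handle;     // driver-assigned handle referring to the server's binder
    bool allowIsolated;  // may isolated processes look this service up?
};

class ServiceRegistry {
public:
    // In the spirit of do_add_service(): register, or replace the handle of an existing entry.
    int addService(const std::string& name, uint32_t handle, bool allowIsolated) {
        if (name.empty() || handle == 0) return -1;  // handle 0 is ServiceManager itself
        mServices[name] = SvcInfo{handle, allowIsolated};
        return 0;
    }
    // In the spirit of do_find_service(): look up by string name; 0 means "not found".
    uint32_t findService(const std::string& name, bool callerIsIsolated) const {
        auto it = mServices.find(name);
        if (it == mServices.end()) return 0;
        if (callerIsIsolated && !it->second.allowIsolated) return 0;
        return it->second.handle;
    }
private:
    std::map<std::string, SvcInfo> mServices;
};

}  // namespace toy
```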


Let's also take a quick look at svc_can_register(s, len, spid, uid):
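The permission check can be pictured as two gates. First, ordinary app uids are never allowed to register a service. Second, a policy decides for everyone else. In recent releases that policy is an SELinux "add" check. The sketch replaces it with a hypothetical (uid, name) allow-list, closer in spirit to the allowed[] table of older releases. The uid arithmetic (uid = userId * 100000 + appId, app ids from 10000) follows Android's uid layout:

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <string>
#include <utility>

namespace toy {

constexpr uint32_t kPerUserRange = 100000;       // uid = userId * 100000 + appId
constexpr uint32_t kFirstApplicationId = 10000;  // app ids start here

// Hypothetical allow-list of (uid, service name) pairs standing in for the real policy check.
using Policy = std::set<std::pair<uint32_t, std::string>>;

uint32_t appIdOf(uint32_t uid) { return uid % kPerUserRange; }

bool svcCanRegister(const std::string& name, uint32_t uid, const Policy& policy) {
    if (appIdOf(uid) >= kFirstApplicationId) return false;  // apps may never register services
    return policy.count({uid, name}) > 0;                    // everyone else asks the policy
}

}  // namespace toy
```

With a policy containing (1013, "media.player"), where 1013 is the media uid (AID_MEDIA), mediaserver may register "media.player" but not other names, and any app uid is refused outright.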


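Summary: as shown above, ServiceManager opens the Binder device, communicates with the Binder driver through ioctl, and stores the information registered through addService.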

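- It manages all services in the system centrally and enforces permission control.

- ServiceManager lets clients look up a service by its string name.

- A client only needs to query ServiceManager to learn whether the corresponding server is alive and to start communicating with it, which is very convenient.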

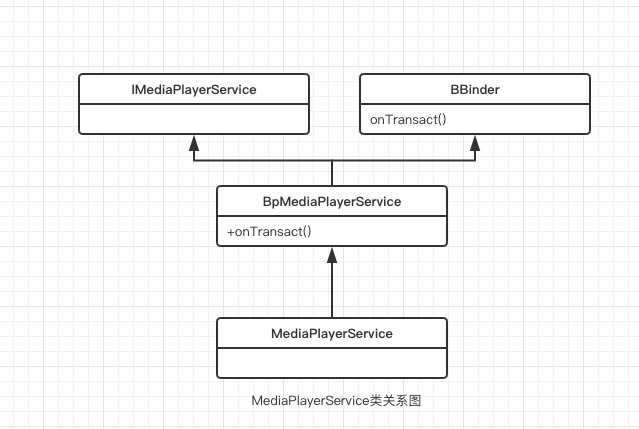

So far we have analyzed ServiceManager and its client. Next, from the business perspective, let's see how MediaPlayerService's communication layer interacts with clients.

A client that wants to use a service must go through ServiceManager and call getService to obtain it. Here is the source of IMediaDeathNotifier::getMediaPlayerService():
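getMediaPlayerService() essentially keeps asking ServiceManager for "media.player" and sleeps between attempts until the service has been published. The sketch models that polling loop. `Lookup` stands in for `sm->getService(name)` and returns 0 while nothing is registered. The retry cap and interval are parameters added for the sketch; as far as the source shows, Android's loop waits about half a second per attempt and keeps trying:

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <functional>
#include <string>
#include <thread>

namespace toy {

// Hypothetical stand-in for sm->getService(name); returns 0 while the service is unpublished.
using Lookup = std::function<uint32_t(const std::string&)>;

// Polls until the service shows up or maxAttempts is exhausted (then returns 0).
uint32_t waitForService(const Lookup& lookup, const std::string& name,
                        int maxAttempts, std::chrono::milliseconds interval) {
    for (int attempt = 0; attempt < maxAttempts; ++attempt) {
        uint32_t handle = lookup(name);
        if (handle != 0) return handle;         // published: hand back its handle
        std::this_thread::sleep_for(interval);  // not yet registered, try again later
    }
    return 0;
}

}  // namespace toy
```

Once a non-zero handle comes back, the real code wraps it with interface_cast<IMediaPlayerService> to obtain a BpMediaPlayerService.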


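With a BpMediaPlayerService in hand, the client can use any business function that IMediaPlayerService provides, such as createMediaRecorder and createMetadataRetriever. Each of these methods calls remote()->transact() to pack the data and hand it to the Binder driver. In other words, they all go through BpBinder, and the driver uses the corresponding handle to locate the corresponding server.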

As analyzed above, MediaPlayerService lives in the MediaServer process, which has two threads calling talkWithDriver. When either thread receives a message, it eventually calls executeCommand():


The class diagram shows that BnMediaPlayerService implements onTransact():


As you can see, BnXxxService is where the business logic that serves the client lives.
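The Bn-side pattern can be sketched in C++: onTransact() checks the interface token, switches on the transaction code, unpacks the arguments, and calls a virtual business method that the concrete service implements. This is a hypothetical simplification. The transaction code value and the string-based `Parcel` are illustrative, and only the descriptor "android.media.IMediaPlayerService" matches the real interface:

```cpp
#include <cassert>
#include <cstdint>
#include <string>

namespace toy {

// Hypothetical Parcel stand-in: interface token, one argument, one result.
struct Parcel { std::string token; std::string arg; std::string out; };

enum : uint32_t { CREATE_MEDIA_RECORDER = 2 };  // illustrative transaction code

class IMediaPlayerService {
public:
    virtual ~IMediaPlayerService() = default;
    virtual std::string createMediaRecorder(const std::string& opPackageName) = 0;
};

// Bn side: unpacks the request and calls the business method.
class BnMediaPlayerService : public IMediaPlayerService {
public:
    virtual int onTransact(uint32_t code, const Parcel& data, Parcel* reply) {
        if (data.token != "android.media.IMediaPlayerService") return -1;  // like CHECK_INTERFACE
        switch (code) {
            case CREATE_MEDIA_RECORDER:
                reply->out = createMediaRecorder(data.arg);
                return 0;
            default:
                return -2;  // unknown transaction
        }
    }
};

// The concrete service implements only business logic and never touches the driver.
class MediaPlayerService : public BnMediaPlayerService {
public:
    std::string createMediaRecorder(const std::string& opPackageName) override {
        return "recorder for " + opPackageName;
    }
};

}  // namespace toy
```

This mirrors the Bp side from the client's point of view. BpMediaPlayerService packs arguments and calls transact(), while BnMediaPlayerService unpacks them in onTransact(), so the concrete service deals with business calls only.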

A demo: a C/S framework implemented purely in native code

AIDL demo